Byte-Pair Encoding Algorithm

The focus of the second issue of One Minute NLP is BPE, a popular tokenization algorithm which is used by most LLMs including GPT, Llama, and Mistral.

Byte-Pair Encoding Algorithm

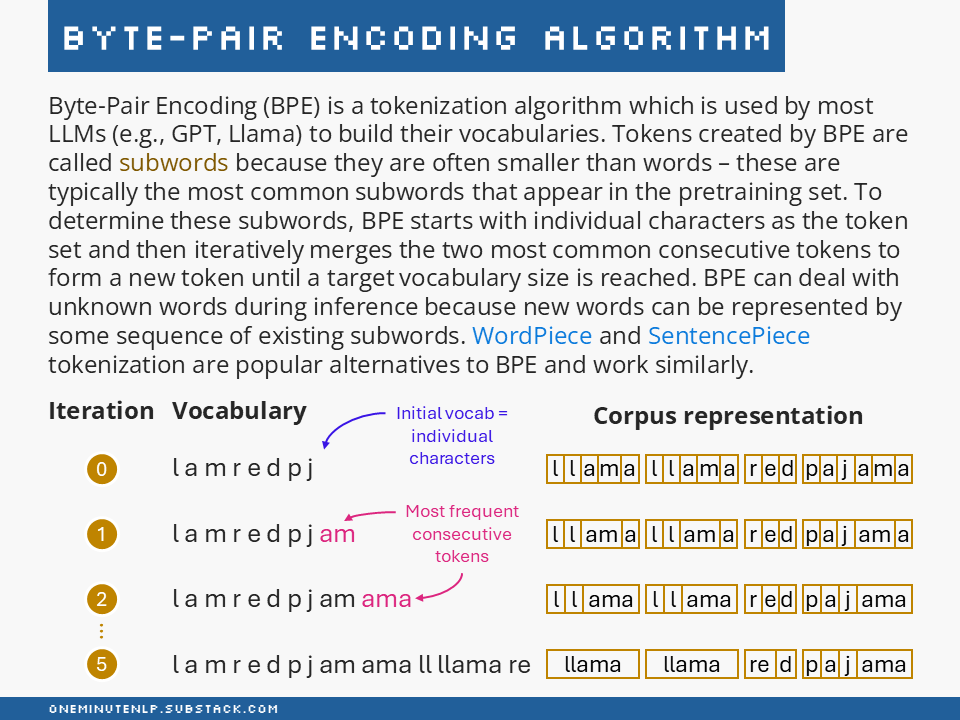

Byte-Pair Encoding (BPE) is a tokenization algorithm which is used by most LLMs (e.g., GPT, Llama) to build their vocabularies. Tokens created by BPE are called subwords because they are often smaller than words – these are typically the most common subwords that appear in the pretraining set. To determine these subwords, BPE starts with individual characters as the token set and then iteratively merges the two most common consecutive tokens to form a new token until a target vocabulary size is reached. BPE can deal with unknown words during inference because new words can be represented by some sequence of existing subwords. WordPiece and SentencePiece tokenization are popular alternatives to BPE and work similarly.

Consider an example corpus: “llama llama red pajama“.

In BPE tokenization, the starting vocabulary consists of individual characters. The corresponding corpus representation would look like this (dashes indicate token boundaries):

Iteration: 0

Vocabulary: l a m r e d p j

Corpus representation: l-l-a-m-a l-l-a-m-a r-e-d p-a-j-a-m-a

Vocabulary and corpus representation after the first iteration (a+m were the most frequent consecutive tokens and are merged into a new token am):

Iteration: 1

Vocabulary: l a m r e d p j am

Corpus representation: l-l-am-a l-l-am-a r-e-d p-a-j-am-a

Vocabulary and corpus representation after the second iteration:

Iteration: 2

Vocabulary: l a m r e d p j am ama

Corpus representation: l-l-ama l-l-ama r-e-d p-a-j-ama

Vocabulary and corpus representation after the fifth iteration:

Iteration: 5

Vocabulary: l a m r e d p j am ama ll llama re

Corpus representation: llama llama re-d p-a-j-ama

Further Reading

Neural Machine Translation of Rare Words with Subword Units by Sennrich et al. — This work was the first to introduce the BPE algorithm to NLP through an application in Neural Machine Translation.

Summary of the Tokenizers (Hugging Face docs) — this page provides a fantastic overview of different tokenization techniques including a detailed explanation of the BPE algorithm.

There are many implementations of BPE, three notable ones include the tiktoken library (used by OpenAI’s models), minbpe (Andrej Karpathy’s minimal implentation), and sentencepiece (Google’s implementation of BPE which, unlike the original implementation, doesn’t require input text to be split into words).

Do you want to learn more NLP concepts?

Each week I pick one core NLP concept and create a one-slide, one-minute explanation of the concept. To receive weekly new posts in your inbox, subscribe here:

Reach out to me:

Connect with me on LinkedIn

Read my technical blog on Medium

Or send me a message by responding to this post

Is there a concept you would like me to cover in a future issue? Let me know!