In-context learning

In-context learning is a prompting technique that allows LLMs to learn and adapt to new tasks based on examples provided within the input prompt, without requiring additional training or fine-tuning.

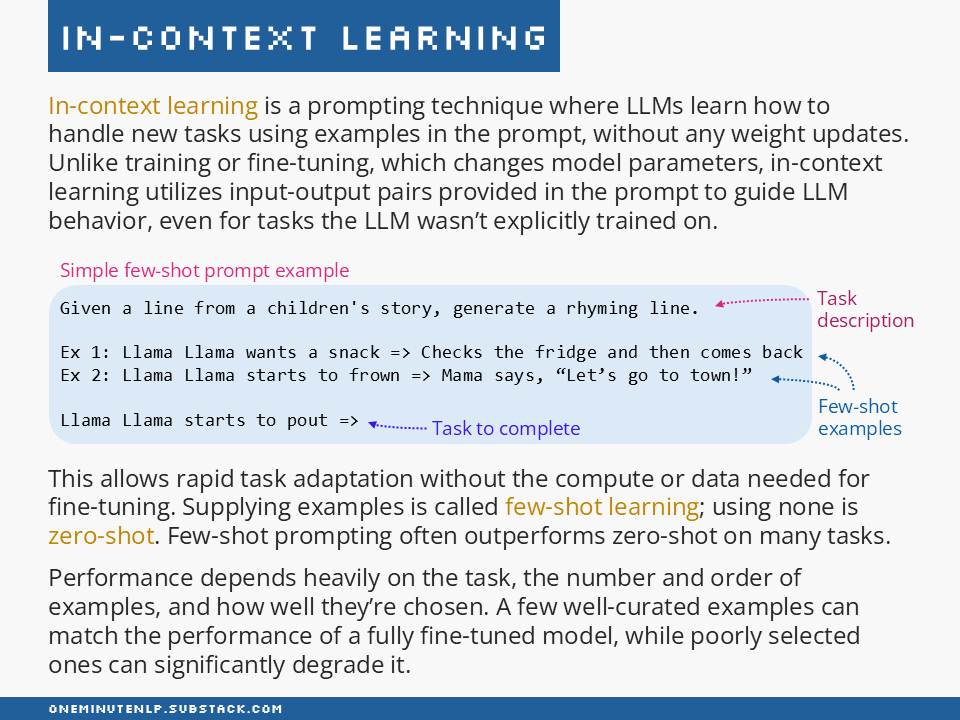

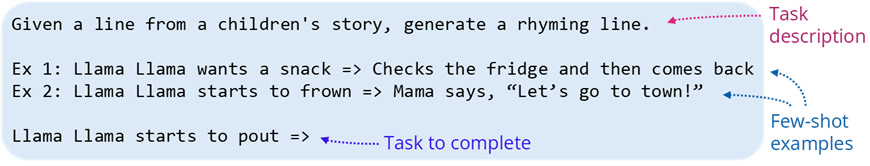

In-context learning is a prompting technique where LLMs learn how to handle new tasks using examples in the prompt, without any weight updates. Unlike training or fine-tuning, which changes model parameters, in-context learning utilizes input-output pairs provided in the prompt to guide LLM behavior, even for tasks the LLM wasn’t explicitly trained on.

This allows rapid task adaptation without the compute or data that fine-tuning requires. Supplying a few examples in the prompt is called few-shot prompting; supplying none is zero-shot prompting. Few-shot prompting often outperforms zero-shot prompting.
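To make the zero-shot versus few-shot distinction concrete, here is a minimal sketch that assembles both kinds of prompt as plain text. The sentiment task, the `Review:`/`Sentiment:` template, and the helper name `build_prompt` are illustrative assumptions rather than a standard format, and no model is called.

```python
def build_prompt(instruction, examples, query):
    """Assemble a zero-shot or few-shot prompt as plain text.

    `examples` is a list of (input, output) pairs; an empty list
    yields a zero-shot prompt. The template is a hypothetical one.
    """
    parts = [instruction]
    for text, label in examples:
        parts.append(f"Review: {text}\nSentiment: {label}")
    # The final block leaves the answer slot empty for the model to complete.
    parts.append(f"Review: {query}\nSentiment:")
    return "\n\n".join(parts)


instruction = "Classify each review as positive or negative."
examples = [
    ("The battery lasts all day.", "positive"),
    ("It broke after a week.", "negative"),
]
query = "Setup was quick and painless."

zero_shot_prompt = build_prompt(instruction, [], query)
few_shot_prompt = build_prompt(instruction, examples, query)
print(few_shot_prompt)
```

The only difference between the two prompts is the block of input-output pairs; the model's weights are untouched in both cases.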

Performance depends heavily on the task, the number and order of examples, and how well they’re chosen. On some tasks, a few well-curated examples can match the performance of a fine-tuned model, while poorly selected examples can significantly degrade results.
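One simple way to act on this is to pick the examples that most resemble the incoming query. The sketch below ranks a small pool by word overlap (Jaccard similarity) and keeps the top `k`. The similarity measure, the helper names, and the choice to place the closest example last are assumptions made for illustration; practical systems often compare embeddings instead.

```python
def jaccard(a, b):
    """Word-overlap similarity between two strings, in [0, 1]."""
    wa, wb = set(a.lower().split()), set(b.lower().split())
    if not wa or not wb:
        return 0.0
    return len(wa & wb) / len(wa | wb)


def select_examples(pool, query, k=2):
    """Return the k examples most similar to the query.

    Results are ordered from least to most similar, so the closest
    example ends up nearest the query in the prompt (a design choice
    of this sketch, not an established rule).
    """
    ranked = sorted(pool, key=lambda ex: jaccard(ex[0], query))
    return ranked[max(0, len(ranked) - k):]


pool = [
    ("the delivery was late", "negative"),
    ("great sound quality", "positive"),
    ("the delivery was fast", "positive"),
    ("terrible sound quality", "negative"),
]
selected = select_examples(pool, "the delivery was very late", k=2)
print(selected)
```

Here the two delivery-related examples are selected and the sound-quality ones are dropped. Changing `k` or reversing the order is an easy way to test how sensitive a given model is to the number and order of examples.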

Further reading

Language Models are Few-Shot Learners by Brown et al. — This paper introduced GPT-3 and the concept of in-context learning.

Prompt Engineering by Lilian Weng — This fantastic article explains many prompt engineering techniques including few-shot prompting and provides tips for selecting good examples.

Practical Prompt Engineering by Cameron Wolfe — Drawing on lots of research articles, this deep dive covers many common prompting techniques including few-shot prompting.
