Perplexity

Welcome to the first issue of One Minute NLP! The focus of this issue is perplexity, a metric commonly used to evaluate language models.

Perplexity

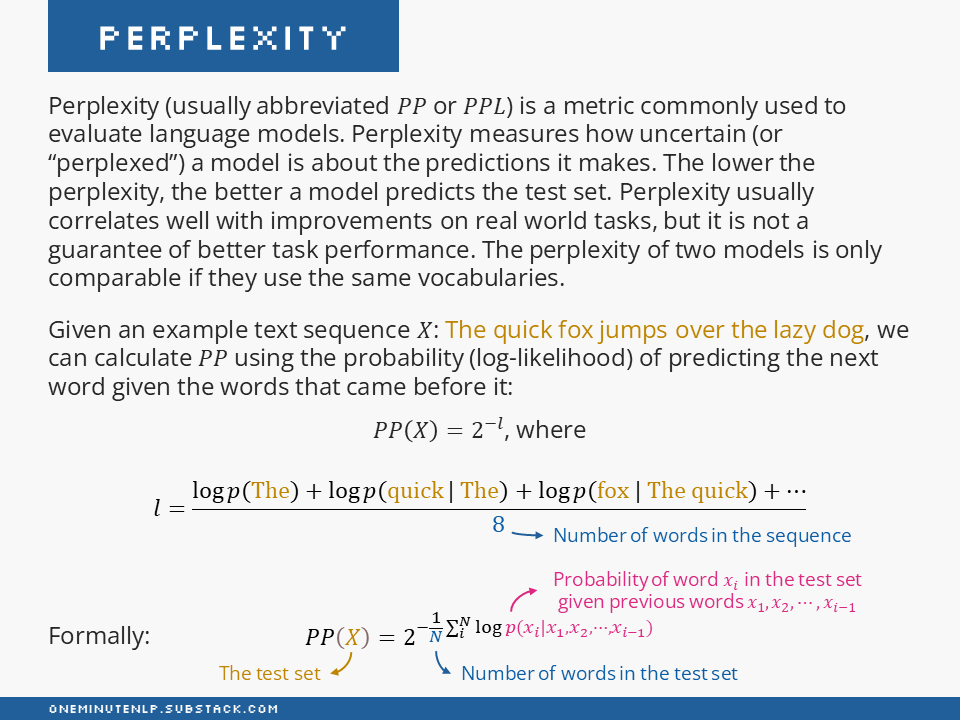

Perplexity (usually abbreviated PP or PPL) is a metric commonly used to evaluate language models. Perplexity measures how uncertain (or “perplexed”) a model is about the predictions it makes. The lower the perplexity, the better a model predicts the test set. Perplexity usually correlates well with improvements on real world tasks, but it is not a guarantee of better task performance. The perplexity of two models is only comparable if they use the same vocabularies.

Given an example text sequence X: The quick fox jumps over the lazy dog, we can calculate PP using the probability (log-likelihood) of predicting the next word given the words that came before it:

where

and N is the number of words in the sequence (N=8 for our test sequence).

Formally:

Further Reading

Speech and Language Processing by Jurafsky and Martin (free to read online) — Section 3.3 (Evaluating Language Models: Perplexity) provides a great explanation of the metric.

Lessons from the Trenches on Reproducible Evaluation of Language Models by Biderman et al. — this paper includes some practical considerations for using perplexity to compare language modeling performance of different models (Appendix A.3).

Perplexity of fixed-length models (Hugging Face docs) — how to calculate perplexity with the Hugging Face Transformers library.

Do you want to learn more NLP concepts?

Each week I pick one core NLP concept and create a one-slide, one-minute explanation of the concept. To receive weekly new posts in your inbox, subscribe here:

Reach out to me:

Connect with me on LinkedIn

Read my technical blog on Medium

Or send me a message by responding to this post

Is there a concept you would like me to cover in a future issue? Let me know!