ReAct Agent Model

ReAct (Reason + Act) is a design pattern for AI agents that incorporates planning and action execution. It has become a common way to implement agents.

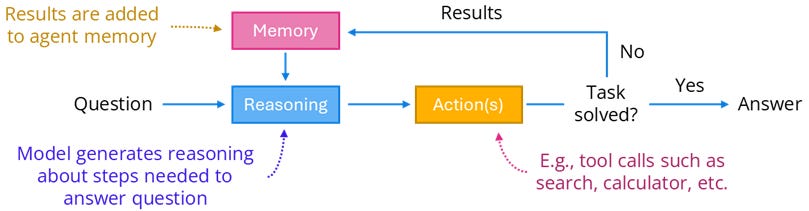

ReAct is an agent design pattern that combines reasoning (thinking through steps logically) with action (interacting with the environment). Earlier prompting strategies handle the two separately: they either generate reasoning (e.g., chain-of-thought) or take actions (e.g., tool use). In ReAct, an LLM is prompted to plan its next action(s), take the action(s), and reflect on the results. This loop repeats until the agent considers the task complete. ReAct has been shown to perform well on complex tasks like multi-hop question answering and has become a common agent pattern that other approaches, such as Reflexion, build upon.
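To make the loop concrete, here is a minimal sketch in Python. It is not the paper's implementation or any library's API: the `Thought:` / `Action: tool[input]` / `Observation:` text format, the `lookup` tool, the `finish` action, and the `scripted_llm` stand-in (which replaces a real model call so the example runs offline) are all assumptions made for illustration.

```python
import re
from typing import Callable, Dict

# Hypothetical tool registry; the tool name and its canned data are illustrative.
TOOLS: Dict[str, Callable[[str], str]] = {
    "lookup": lambda q: {"capital of France": "Paris"}.get(q, "no result"),
}

def scripted_llm(transcript: str) -> str:
    """Stand-in for a real LLM call: returns a canned Thought/Action pair."""
    if "Observation: Paris" in transcript:
        return "Thought: I have the answer.\nAction: finish[Paris]"
    return "Thought: I should look this up.\nAction: lookup[capital of France]"

# Assumed action syntax: "Action: tool_name[tool input]"
ACTION_RE = re.compile(r"Action:\s*(\w+)\[(.*?)\]")

def react(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    """Run a reason -> act -> observe loop until 'finish' or the step budget ends."""
    transcript = f"Question: {question}\n"
    for _ in range(max_steps):
        # Reason: the model sees the whole transcript and proposes the next action.
        step = llm(transcript)
        transcript += step + "\n"
        match = ACTION_RE.search(step)
        if match is None:
            transcript += "Observation: could not parse an action\n"
            continue
        name, arg = match.groups()
        if name == "finish":
            return arg
        # Act: call the tool, then feed the result back as an observation.
        tool = TOOLS.get(name)
        observation = tool(arg) if tool else f"unknown tool {name}"
        transcript += f"Observation: {observation}\n"
    return "no answer within step budget"

answer = react("What is the capital of France?", scripted_llm)
print(answer)
```

Swapping `scripted_llm` for a function that calls a real model is the only change needed to try this against an actual LLM. The `max_steps` cap is a design choice in this sketch that keeps a confused agent from looping forever.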

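ReAct may struggle when the results of its actions are incomplete or incorrect, because later reasoning steps build on them. It can also cost more and run slower than simpler methods, since each task takes more steps and more tokens. The original paper reports that ReAct performs best when the model is fine-tuned rather than only prompted.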
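A rough back-of-the-envelope sketch shows where the extra tokens come from. It assumes a naive loop that resends the full transcript on every LLM call. The function name and the token counts (a 500-token prompt, 200 tokens added per step) are made-up numbers for illustration, not measurements.

```python
def cumulative_prompt_tokens(base_tokens: int, tokens_per_step: int, steps: int) -> int:
    """Total prompt tokens sent across all calls when each call resends the transcript."""
    total = 0
    for i in range(steps):
        # Call i sees the base prompt plus everything appended by the i earlier steps.
        total += base_tokens + i * tokens_per_step
    return total

single_call = cumulative_prompt_tokens(500, 200, 1)  # 500
five_steps = cumulative_prompt_tokens(500, 200, 5)   # 4500
print(single_call, five_steps)
```

Under these assumptions, five steps send nine times as many prompt tokens as a single call, and the total grows roughly quadratically with the number of steps. Prompt caching or transcript summarization can reduce this in practice.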

Further reading

ReAct: Synergizing Reasoning and Acting in Language Models by Yao et al. — This paper introduced the ReAct Agent Model. This blog post by the paper’s authors provides a concise summary of the paper.

Both Hugging Face Transformers and LangChain's LangGraph provide implementations of ReAct. You can view the ReAct prompt used by LangChain in the LangChain Hub.

Agents by Chip Huyen — this fantastic blog post covers much more than just ReAct, but if you are looking to understand agents and where ReAct fits in, this is a great place to start.
